
Artificial intelligence is used more and more in everyday life. Unfortunately, where there are good actors, there are also bad actors. Fraudsters can use AI in multiple ways: to clone voices, alter images, and create fake videos that spread false or misleading information. These are some of the risks we need to deal with in today's world. To use AI well, we need to understand both the risks and the opportunities it presents. The risks can be high, but prevention is key. Like every technology, AI can be put to good or evil uses. Within financial services, AI can be applied to identify unusual activity, flag inconsistent data, reduce manual effort, improve collaboration, and review vast amounts of information quickly and efficiently.

During this webinar, we will discuss the risks and opportunities AI presents today, how fraudsters are using it, and how legitimate companies use it to their advantage.

Overview of AI

• The risks that are out there

• The opportunities AI presents

• How we protect ourselves as an organization

• Future

• Q & A

This dynamic and compelling presentation explores how chronic stress, sleep deprivation, and substan...

This course breaks down GAAP’s ten foundational principles and explores their compliance impli...

The “Chaptering Your Cross” program explains how dividing a cross?examination into clear...

This session highlights the legal and compliance implications of divergences between GAAP and IFRS. ...

This CLE program covers the most recent changes affecting IRS information reporting, with emphasis o...

Part 2 dives deeper into advanced cross?examination techniques, teaching attorneys how to maintain c...

This course clarifies the distinction between profit and cash flow from a legal perspective. Attorne...

This program explores listening as a foundational yet under-taught lawyering skill that directly imp...

Loneliness isn’t just a personal issue; it’s a silent epidemic in the legal profession t...

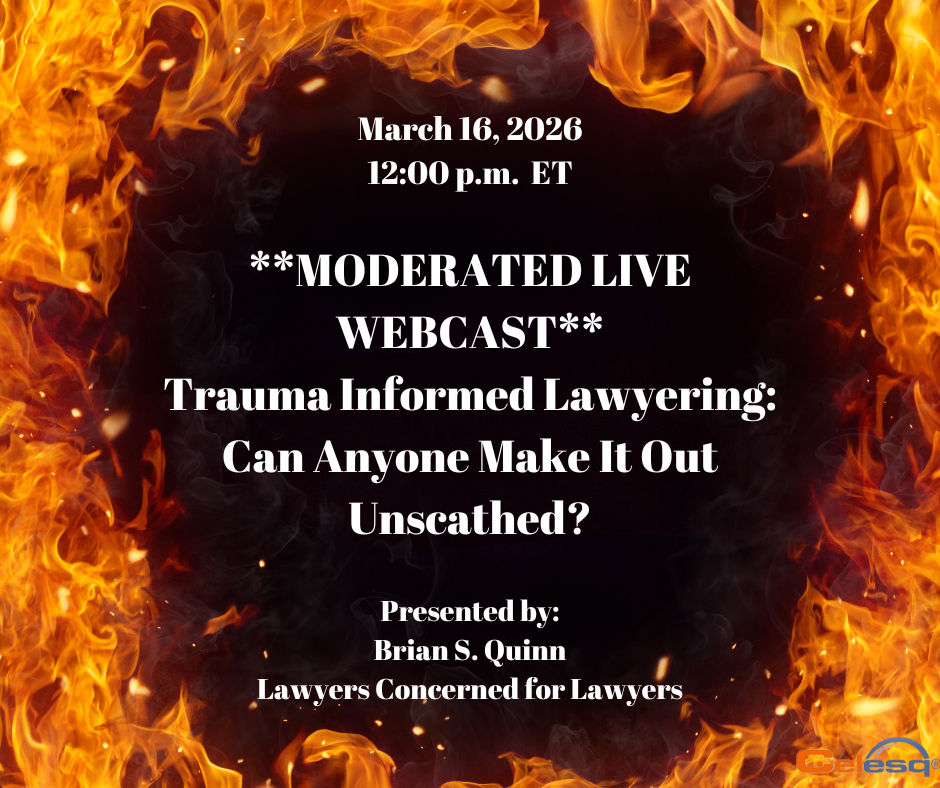

Attorneys hopefully recognize that, like many other professionals, their lives are filled to the bri...